Building the Guardrails Before Anyone Asked for Them

Company: Okta | Timeline: FY25–FY26 | Scope: Cross-functional (Security, Legal, Privacy Ops, Finance)

AI governance · Emerging technology policy · Cross-functional leadership · Change management

Most teams were either ignoring AI or waiting for someone else to figure it out.

By early FY25, AI tools were proliferating across Okta's research workflows—but without guardrails. Researchers were experimenting in isolation, unsure what was sanctioned. Security had concerns. Legal had questions. And the research team was caught between curiosity and caution.

There were no existing guidelines. No precedent internally. The industry itself was still figuring it out. The risk wasn't just misuse—it was paralysis. Teams that could benefit from AI augmentation were stalling because nobody had defined what "responsible use" looked like.

I partnered with Security, Legal, and Privacy before they had to come to me.

Rather than waiting for a compliance mandate, I proactively engaged stakeholders across Security GRC, Legal, Privacy Operations, and Enterprise Service Management. This wasn't about drafting a policy document in isolation—it was about building co-ownership from the start.

I facilitated cross-functional workshops to identify risk thresholds, mapped existing data handling procedures to AI-specific scenarios, and established baseline criteria for tool approval. The framework was designed to guide rather than gatekeep—enabling teams to experiment with confidence while maintaining accountability.

"Jared never shies away from challenges or 'red tape,' consistently tackling requests with diligence, no matter how impossible they seem at Okta." — Feedback, UX Researcher

A tactical guide that people actually use.

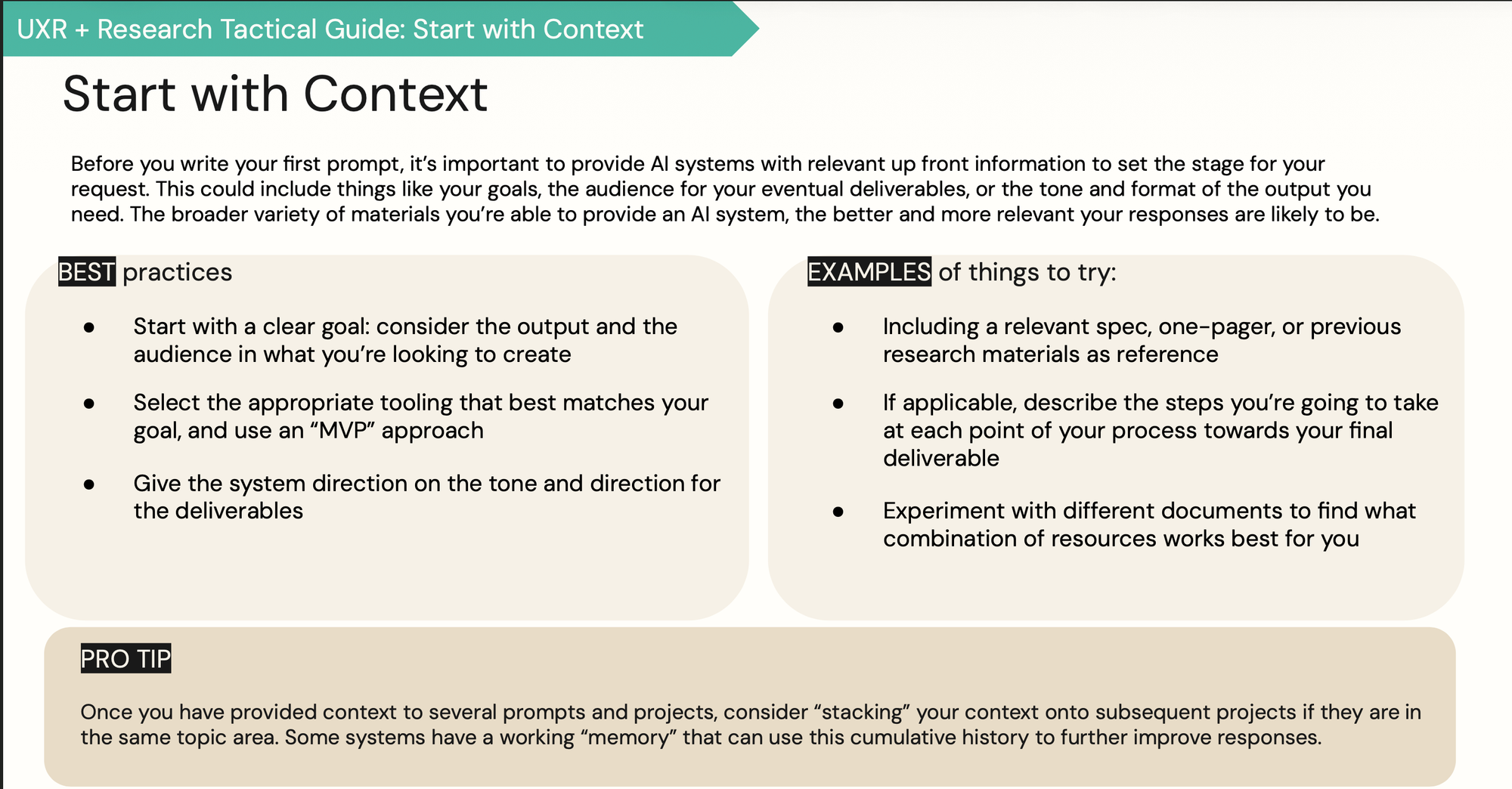

The output wasn't a 50-page policy binder. It was a practical, tactical AI research guide—clear enough for a researcher to reference mid-project, specific enough to satisfy Security's requirements. It covered tool vetting criteria, data handling protocols for AI-assisted analysis, and decision frameworks for when AI augmentation is (and isn't) appropriate.

"Jared is a truly innovative force within the research team. He had a remarkably early and clear vision for how AI could add value to our research process, from analysis to stakeholder insight absorption. He has a unique talent for breaking down complex problems, such as AI integration or insights distillation, into manageable, collaborative workshops that empower the team without overwhelming our daily tasks."

— Feedback, UX Researcher

I also developed enablement workshops to build team confidence, not just compliance. The goal was to shift the team's culture, turning AI skeptics into curious practitioners who understood the boundaries and felt empowered within them.

From internal framework to industry stage.

The work earned external recognition. I joined a panel at Dovetail's Insight Out 2025 conference (April 2025, Fort Mason, San Francisco)—"Mediated Meaning: AI's Role in the Research Craft"—alongside Kaleb Loosbrock (Staff Researcher, Instacart) and Sam Ladner (research consultant and author), moderated by Alissa Lydon.

The panel was more than a speaking opportunity: it positioned Okta as a thought leader in responsible AI adoption within research operations and validated the governance-first approach I'd built internally.

The framework continues to evolve. As new tools and capabilities emerge, the governance model scales with them—because it was built on principles and partnerships, not a static checklist.